Analysis of UAV avoidance technology and perception technology

An unmanned aerial vehicle (UAV) is an aircraft with no pilot on board, usually controlled by radio remote control or by its own onboard program. Because no pilot-related equipment needs to be installed, UAVs share the wide operating airspace and high speed of conventional aircraft. They also have the advantages of small size, light weight, good concealment, and strong adaptability.

In the military field, UAVs can perform a wide range of tasks in all weather and across all airspace. These include reconnaissance, early warning, communications, precision strikes, combat support, rescue, resupply, and even suicide attacks, and their role in modern warfare is becoming increasingly significant. In the civil field, UAVs are widely used in aerial photography, policing, urban management, agriculture, geology, meteorology, the electric power industry, emergency rescue, disaster relief, and other fields [1].

However, flight safety problems remain a major obstacle to the wider adoption of UAVs in both military and civilian applications. With the large-scale use of drones, medium-to-low-altitude and ultra-low-altitude airspace is becoming increasingly “crowded”. The risk of collisions between UAVs and other objects grows day by day and creates serious safety hazards [2].

Since there is no pilot on board to detect and avoid obstacles, the UAV system must rely on airborne sensors to do so. This process is called “sense and avoid”, and the system that performs this function is called the “perception and avoidance system”.

Perception and avoidance systems are essential to the autonomous flight safety of UAV systems. Countries around the world have recognized that autonomous sense-and-avoid technology is a key factor in promoting the development of UAV applications [3]. On October 25, 2017, the United States launched the Unmanned Aircraft Systems Integration Pilot Program, which aims to accelerate the integration of UAV systems into the national airspace. UAV sense-and-avoid capability is one of the key subjects assessed in this program.

1. UAV autonomous sensing and avoidance system

“Perception and avoidance” means that the drone can detect obstacles within its safety neighborhood or airspace surveillance range, including stationary objects and other moving aircraft. It analyzes the relative motion between itself and each obstacle and automatically makes avoidance decisions, thereby eliminating the potential collision hazard [4].

The autonomous perception and avoidance system of a UAV usually consists of three parts: the perception system, the decision-making system, and the route planning system [5]. The general workflow is as follows. First, the perception system detects whether an obstacle is present and, if so, measures its distance, angle, speed, and other information. Next, the decision-making system uses this information to judge whether the obstacle threatens flight safety and whether the route needs to be re-planned. If re-planning is required, the route planning system integrates onboard and external information and adjusts the route to avoid a collision. The whole process is shown in Figure 1.

Figure 1 Flow chart of the perception and avoidance system
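The three-stage workflow above can be sketched as a single control step that passes data from perception to decision to route planning. This is a minimal illustration, not the structure of any particular system. The `Obstacle` fields, the 30 s reserved-time threshold, and the fixed 30° turn are all hypothetical choices.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Obstacle:
    distance_m: float         # range to the obstacle
    bearing_deg: float        # relative to the UAV heading; positive = to the right
    closing_speed_mps: float  # positive when the obstacle is approaching


def perception(detections: List[Obstacle]) -> Optional[Obstacle]:
    """Perception stage: report the nearest detected obstacle, or None."""
    return min(detections, key=lambda o: o.distance_m, default=None)


def decision(obstacle: Optional[Obstacle], tau_threshold_s: float = 30.0) -> bool:
    """Decision stage: re-plan if the reserved time is below a (hypothetical) threshold."""
    if obstacle is None or obstacle.closing_speed_mps <= 0:
        return False  # nothing detected, or the obstacle is not approaching
    return obstacle.distance_m / obstacle.closing_speed_mps <= tau_threshold_s


def route_planning(heading_deg: float, obstacle: Obstacle, turn_deg: float = 30.0) -> float:
    """Route planning stage: turn away from the side the obstacle is on."""
    offset = -turn_deg if obstacle.bearing_deg >= 0 else turn_deg
    return (heading_deg + offset) % 360.0


def sense_and_avoid_step(heading_deg: float, detections: List[Obstacle]) -> float:
    """One pass through perception -> decision -> route planning."""
    obstacle = perception(detections)
    if obstacle is not None and decision(obstacle):
        return route_planning(heading_deg, obstacle)
    return heading_deg


if __name__ == "__main__":
    ahead_right = Obstacle(distance_m=500.0, bearing_deg=10.0, closing_speed_mps=25.0)
    # Reserved time is 500 / 25 = 20 s, below the threshold, so the UAV turns left.
    print(sense_and_avoid_step(90.0, [ahead_right]))
```

Keeping each stage as a separate function mirrors Figure 1. Any stage, such as a richer planner, can be swapped in without touching the others.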

2. Perception system

The perception system is the first link in the UAV perception and avoidance system. It must detect obstacles and obtain information about them to support the decision-making system. Perception systems can be divided into two types: cooperative and non-cooperative.

2.1 Cooperative perception system

Cooperative sensing systems require the aircraft involved to carry cooperative equipment so that they can detect one another. Examples are the Traffic Alert and Collision Avoidance System (TCAS) and Automatic Dependent Surveillance-Broadcast (ADS-B).

(1) Traffic Alert and Collision Avoidance System (TCAS)

TCAS is an independent traffic alert and collision avoidance system. The aircraft must be equipped with an interrogator/transponder (Mode A/C) and TCAS antennas. TCAS actively transmits interrogation signals, and when another aircraft's transponder receives an interrogation, it transmits a reply. The TCAS processor then calculates the distance from the time interval between the transmitted interrogation and the received reply. The directional antenna determines the azimuth, and together these give the position of the target aircraft.

However, TCAS can only detect aircraft that carry transponders. The limited size of the TCAS antenna also makes its angle measurement error relatively large. For these reasons, TCAS alone cannot accomplish airspace obstacle sensing for UAVs.
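The ranging step described above can be illustrated as follows. The signal travels out and back, so the one-way range is half the propagation time multiplied by the speed of light. The transponder's fixed turnaround delay must first be subtracted from the measured interval. This is a sketch under stated assumptions. The 3 µs default delay is a hypothetical value, since the actual figure depends on the transponder mode. The sketch also treats slant range as horizontal range and measures bearing clockwise from north.

```python
import math

SPEED_OF_LIGHT_MPS = 299_792_458.0


def tcas_range_m(round_trip_s: float, reply_delay_s: float = 3.0e-6) -> float:
    """Slant range from the interrogation-to-reply time interval.

    reply_delay_s is an assumed fixed transponder turnaround delay.
    """
    return SPEED_OF_LIGHT_MPS * (round_trip_s - reply_delay_s) / 2.0


def relative_position(range_m: float, bearing_deg: float) -> tuple:
    """(east, north) offset of the intruder.

    Simplifying assumptions: slant range is treated as horizontal range,
    and bearing is measured clockwise from north.
    """
    b = math.radians(bearing_deg)
    return range_m * math.sin(b), range_m * math.cos(b)


if __name__ == "__main__":
    t = 3.0e-6 + 2 * 1852.0 / SPEED_OF_LIGHT_MPS  # intruder one nautical mile away
    r = tcas_range_m(t)
    print(round(r, 3), relative_position(r, 45.0))
```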

(2) Automatic Dependent Surveillance-Broadcast (ADS-B)

Automatic Dependent Surveillance-Broadcast (ADS-B) is an airborne surveillance system. It uses the Global Positioning System (GPS), the inertial navigation system (INS), and other airborne avionics to obtain two kinds of data:

- the aircraft's four-dimensional position (longitude, latitude, altitude, time);
- its own state information (speed, heading, etc.).

It broadcasts these data to surrounding aircraft and ground users, who receive and display them.

ADS-B can monitor aircraft in the airspace that carry ADS-B equipment. Through its link with the air traffic control (ATC) system, it can also obtain weather, terrain, airspace restrictions, and other flight information for the airspace in use [6]. A UAV can combine this with the flight regulations and the detailed information ADS-B provides about other aircraft. This makes possible path planning, airspace target awareness, threat assessment, conflict avoidance, and similar functions.

ADS-B also allows drones to be brought under ATC supervision and management, which improves airspace safety. The International Civil Aviation Organization (ICAO) has identified ADS-B as the main future direction for airspace surveillance technology, and the international aviation community is actively promoting its application.
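Threat assessment needs the intruder's position relative to the UAV, not two absolute latitude/longitude reports. The sketch below converts two ADS-B-style reports into an east/north/up offset with a local flat-Earth (equirectangular) approximation. That approximation is usually adequate over the few-kilometre separations relevant to collision avoidance. It assumes a spherical Earth of radius 6,371 km and altitudes already converted to metres. The tuple layout `(lat_deg, lon_deg, alt_m)` is a hypothetical convention, not the ADS-B message format.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical-Earth assumption)


def adsb_relative_enu(own, intruder):
    """Approximate (east, north, up) offset in metres of intruder from own.

    Each report is (lat_deg, lon_deg, alt_m). Uses a local equirectangular
    approximation, so accuracy degrades over long distances.
    """
    lat1, lon1, alt1 = own
    lat2, lon2, alt2 = intruder
    dlat = math.radians(lat2 - lat1)
    dlon = math.radians((lon2 - lon1 + 180.0) % 360.0 - 180.0)  # wrap across the antimeridian
    mean_lat = math.radians((lat1 + lat2) / 2.0)
    north = dlat * EARTH_RADIUS_M
    east = dlon * EARTH_RADIUS_M * math.cos(mean_lat)
    up = alt2 - alt1
    return east, north, up


if __name__ == "__main__":
    own = (30.0, 120.0, 1000.0)
    intruder = (30.0 + 1 / 60, 120.01, 1300.0)
    e, n, u = adsb_relative_enu(own, intruder)
    print(f"east={e:.1f} m, north={n:.1f} m, up={u:.1f} m")
```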

2.2 Non-cooperative perception system

When the UAV and the obstacle cannot exchange information, the UAV must detect the obstacle autonomously with a non-cooperative sensing system. Such systems usually use radar, laser, and photoelectric sensors.

(1) Radar

A radar system is an active detection device that detects obstacles using reflected electromagnetic waves. The echo contains information such as the direction and size of the obstacle and its relative distance. Radar offers a wide detection range and all-weather, day-and-night operation.

However, radar systems are usually large and power-hungry, which makes them unsuitable for small and medium UAV systems. Their ability to identify targets accurately is also limited.
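The standard monostatic radar range equation (not given in the source) helps show why radar is hard to shrink for small UAVs. Maximum detection range grows only with the fourth root of transmit power, so doubling the range needs about 16 times the power. The sketch below assumes one antenna is used for both transmit and receive and ignores system losses, atmospheric attenuation, and clutter. The example parameter values are hypothetical.

```python
import math


def radar_max_range_m(pt_w: float, gain: float, wavelength_m: float,
                      rcs_m2: float, s_min_w: float) -> float:
    """Maximum detection range from the monostatic radar range equation:

        R_max = (Pt * G^2 * lambda^2 * sigma / ((4*pi)^3 * S_min)) ** (1/4)

    pt_w: transmit power (W); gain: antenna gain (linear);
    wavelength_m: carrier wavelength (m); rcs_m2: target radar cross
    section (m^2); s_min_w: minimum detectable received power (W).
    """
    numerator = pt_w * gain**2 * wavelength_m**2 * rcs_m2
    denominator = (4 * math.pi) ** 3 * s_min_w
    return (numerator / denominator) ** 0.25


if __name__ == "__main__":
    # Hypothetical parameters, chosen only to illustrate the scaling.
    params = dict(gain=100.0, wavelength_m=0.0125, rcs_m2=1.0, s_min_w=1e-13)
    base = radar_max_range_m(pt_w=1.0, **params)
    boosted = radar_max_range_m(pt_w=16.0, **params)
    print(f"{base:.0f} m -> {boosted:.0f} m with 16x the transmit power")
```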

(2) Laser

Laser sensors offer high precision and good directivity. An airborne laser sensing system emits a laser beam toward the target and compares the received reflection with the emitted beam to obtain the target distance. After further processing, the target's position, speed, shape, and other parameters can be obtained. Laser sensors are not easily affected by airflow but are susceptible to interference from smoke, dust, and raindrops. At present, laser sensors are used to some extent in military and civilian sensing and obstacle avoidance systems.

Lidar has a simple structure, high speed, and light weight. In Reference [7], the authors analyzed the URG-04LX laser rangefinder from the Japanese company Hokuyo. Its specifications are:

- wavelength: 785 nm;
- scan area: a 240° sector with a radius of 4 m;
- angular resolution: 0.36°;
- ranging accuracy: 10 mm.

The authors combined it with the Polar Scan Matching (PSM) algorithm, which establishes point correspondences by bearing angle. With PSM, the sensor can obtain the relative position and attitude of a quadrotor UAV in complex environments, and the algorithm converges quickly with a short iteration time. Other classic algorithms include Iterative Closest Point (ICP) and Iterative Dual Correspondence (IDC). Among them, PSM is faster than ICP in some three-dimensional scenarios.
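To make scan matching concrete, the sketch below first converts a polar laser scan into Cartesian points. It uses the 240° sector and 0.36° resolution quoted above as defaults. It then aligns two point sets with a basic point-to-point ICP: brute-force nearest neighbours followed by an SVD-based least-squares rigid fit. This is a textbook ICP written for illustration, not the PSM algorithm from Reference [7]. It assumes NumPy, 2-D scans, and small motion between scans.

```python
import numpy as np


def polar_scan_to_points(ranges, fov_deg=240.0, resolution_deg=0.36):
    """Convert a laser scan (one range per beam, first beam at -fov/2) to 2-D points."""
    r = np.asarray(ranges, dtype=float)
    angles = np.deg2rad(-fov_deg / 2.0 + resolution_deg * np.arange(r.size))
    return np.column_stack((r * np.cos(angles), r * np.sin(angles)))


def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t with dst ~ src @ R.T + t (SVD method)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    h = (src - src_c).T @ (dst - dst_c)
    u, _, vt = np.linalg.svd(h)
    rot = vt.T @ u.T
    if np.linalg.det(rot) < 0:  # avoid returning a reflection
        vt[-1, :] *= -1
        rot = vt.T @ u.T
    return rot, dst_c - rot @ src_c


def icp_2d(src, dst, max_iters=50, tol=1e-10):
    """Align point set src to dst; returns the cumulative (R, t) mapping src onto dst."""
    cur = np.asarray(src, dtype=float).copy()
    dst = np.asarray(dst, dtype=float)
    rot_total, t_total = np.eye(2), np.zeros(2)
    prev_err = np.inf
    for _ in range(max_iters):
        # Brute-force nearest neighbour in dst for every current point.
        d2 = ((cur[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nearest = d2.argmin(axis=1)
        rot, t = best_rigid_transform(cur, dst[nearest])
        cur = cur @ rot.T + t
        rot_total, t_total = rot @ rot_total, rot @ t_total + t
        err = np.sqrt(((cur - dst[nearest]) ** 2).sum(axis=1).mean())
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return rot_total, t_total


if __name__ == "__main__":
    theta = np.deg2rad(2.0)
    true_rot = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
    true_t = np.array([0.05, 0.02])
    src = np.array([[0, 0], [1.5, 0.2], [0.3, 1.7], [-1.2, 0.9], [-0.8, -1.4], [1.1, -1.3]], float)
    dst = src @ true_rot.T + true_t
    rot, t = icp_2d(src, dst)
    # Expected to recover the 2 degree rotation and the (0.05, 0.02) shift.
    print(np.rad2deg(np.arctan2(rot[1, 0], rot[0, 0])), t)
```

The brute-force neighbour search is O(N·M) per iteration. Real implementations typically use a k-d tree instead.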

The above algorithms are all localization methods based on the idea of Simultaneous Localization and Mapping (SLAM). For randomly appearing moving objects, the UAV can also perform servo control directly on the imaged point cloud to avoid them, without iteratively calculating its own relative position.
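A minimal sketch of such direct servoing is shown below, assuming a 2-D body-frame point cloud with x forward and y to the left. The rule checks whether any point lies in a corridor ahead of the UAV and, if so, steers away from the side of the nearest blocking point. No pose is estimated. The corridor width, look-ahead distance, and tie-breaking rule are hypothetical choices, not a published method.

```python
import numpy as np


def reactive_avoid(points, corridor_half_width_m=0.5, look_ahead_m=3.0):
    """Return 'continue', 'left' or 'right' directly from the current point cloud.

    points: (N, 2) array in the body frame, x forward and y to the left (m).
    """
    pts = np.asarray(points, dtype=float).reshape(-1, 2)
    in_corridor = ((pts[:, 0] > 0.0) & (pts[:, 0] < look_ahead_m)
                   & (np.abs(pts[:, 1]) < corridor_half_width_m))
    if not in_corridor.any():
        return "continue"
    blocking = pts[in_corridor]
    nearest = blocking[np.argmin(blocking[:, 0])]  # closest blocking point ahead
    # Steer away from the side the nearest point lies on (a point dead ahead goes right).
    return "right" if nearest[1] >= 0.0 else "left"


if __name__ == "__main__":
    cloud = [(2.0, -0.1), (1.0, 0.3), (5.0, 0.0)]
    print(reactive_avoid(cloud))
```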

(3) Optoelectronics

A photoelectric sensor uses photoelectric elements to convert the light signal from the target scene into an electrical signal. It works passively, and the images it acquires contain rich detail, which makes it the most intuitive sensor for the operator. It is an indispensable sensor in the non-cooperative sense-and-avoid systems of today's large military UAVs [8].

High-resolution visible-light CCD cameras offer long range, high resolution, and good concealment, and they are the first choice for UAV obstacle detection. However, they cannot work around the clock. The current mainstream airborne photoelectric system therefore usually pairs a high-resolution visible-light CCD sensor with a lower-resolution infrared thermal imager. Infrared thermal imagers can detect and identify obstacles in all weather, based on differences in radiation characteristics between targets and background.

An image from a single photoelectric sensor loses depth information and cannot directly give the distance to a target. Accurate obstacle information therefore requires either multiple sensors using stereo vision, or combining the camera with laser and radar sensors [9].

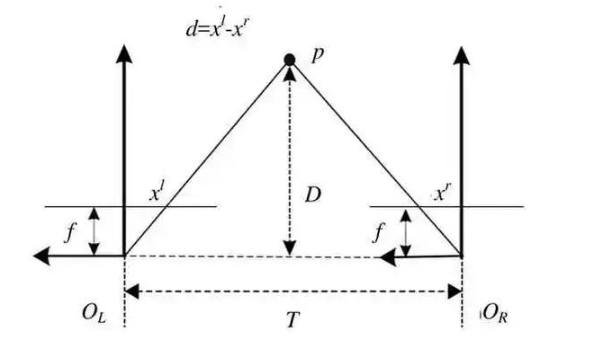

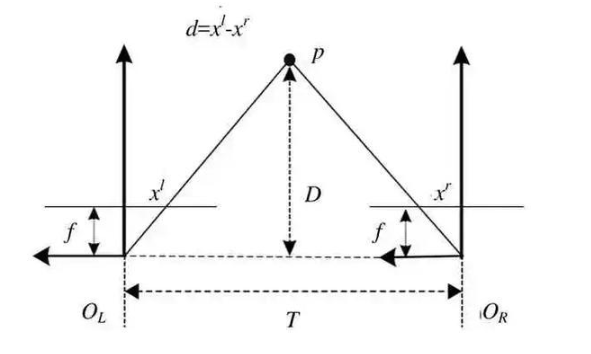

Figure 2 Principle of the binocular vision algorithm

Figure 2 shows the basic principle of the binocular vision algorithm. The symbols are:

- P: the target;
- OL and OR: the optical centers of the left and right lenses;
- f: the focal length of the lenses;
- D: the perpendicular distance from the target to the line joining the two lenses;
- T: the baseline distance between the lenses;
- d: the disparity between the left and right images, d = xl - xr, where xl and xr are the horizontal image coordinates of P in the left and right images.

The target distance is then D = T·f/d.
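The formula D = T·f/d translates directly into code. The sketch also adds a first-order error estimate that is not in the source. Differentiating D = T·f/d with respect to d gives |dD/dd| = D²/(T·f), so a fixed disparity error produces a depth error that grows with the square of the distance. The baseline, focal length, and pixel coordinates in the example are hypothetical.

```python
def stereo_depth_m(x_left_px: float, x_right_px: float,
                   baseline_m: float, focal_px: float) -> float:
    """Target distance D = T * f / d, with disparity d = xl - xr in pixels."""
    disparity = x_left_px - x_right_px
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return baseline_m * focal_px / disparity


def depth_uncertainty_m(depth_m: float, baseline_m: float, focal_px: float,
                        disparity_error_px: float = 1.0) -> float:
    """First-order depth error: D**2 / (T * f) * delta_d."""
    return depth_m ** 2 / (baseline_m * focal_px) * disparity_error_px


if __name__ == "__main__":
    # Hypothetical rig: 10 cm baseline, 700 px focal length, 35 px disparity.
    depth = stereo_depth_m(400.0, 365.0, baseline_m=0.10, focal_px=700.0)
    print(depth, depth_uncertainty_m(depth, 0.10, 700.0))
```

The quadratic error term is one reason small stereo rigs, with their short baselines, are mainly useful at short range.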

The greatest strength of binocular vision lies in stereo matching [10], which makes it possible to perceive the depth and distance of objects in the scene. As a result, a great many binocular vision algorithms have been proposed. In Reference [11], the authors discuss several theoretical methods of optimal estimation.

Stereo matching algorithms can generally be divided into local and global algorithms. Local algorithms are window-based and use local image features as the basis for optimal estimation. Global algorithms do not limit the optimization to a window. Among the global methods, stereo matching based on dynamic programming and stereo matching based on graph cuts are the most representative.
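As an example of the window-based local approach, the sketch below computes a disparity map by block matching with a sum-of-absolute-differences (SAD) cost. It uses winner-takes-all selection over the candidate disparities. This is a generic textbook local method chosen for illustration, not an algorithm from References [10] or [11]. It assumes NumPy and rectified, equally sized grayscale images. Real implementations vectorize the loops and add sub-pixel refinement and consistency checks.

```python
import numpy as np


def sad_block_matching(left, right, max_disp=16, half_win=2):
    """Local (window-based) stereo matching with a SAD cost.

    left, right: rectified grayscale images of equal shape (H, W).
    Returns an integer disparity map; border pixels that cannot hold a full
    window at every candidate disparity are left at 0.
    """
    left = np.asarray(left, dtype=float)
    right = np.asarray(right, dtype=float)
    h, w = left.shape
    disp = np.zeros((h, w), dtype=int)
    k = half_win
    for y in range(k, h - k):
        for x in range(k + max_disp, w - k):
            patch = left[y - k:y + k + 1, x - k:x + k + 1]
            costs = [np.abs(patch - right[y - k:y + k + 1, x - d - k:x - d + k + 1]).sum()
                     for d in range(max_disp + 1)]
            disp[y, x] = int(np.argmin(costs))  # winner-takes-all over the window cost
    return disp


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    left_img = rng.random((20, 40))
    right_img = np.roll(left_img, -3, axis=1)  # every point appears 3 px further left
    disparity = sad_block_matching(left_img, right_img, max_disp=8)
    print(np.unique(disparity[2:-2, 10:-2]))  # expected [3]
```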

However, the high-precision positioning and navigation these algorithms can achieve depends heavily on several factors. These are the accuracy of the cameras, the accuracy and real-time performance of the stereo matching algorithm, and the accuracy of ranging and target recognition. All of them still pose considerable challenges.

DJI’s Phantom 4 drone uses stereo vision for perception and avoidance. It carries two binocular stereo vision systems, one facing forward and one facing down, for a total of four cameras. The downward-facing system detects the three-dimensional positions of objects on the ground below and calculates the drone's precise flight height. The forward-facing system estimates the depth of objects in the scene ahead and generates a depth map for obstacle perception.

2.3 Multi-source information fusion perception system

An effective drone perception system usually contains multiple sensors. Multi-source information fusion processes data from these sensors comprehensively, at multiple levels and from multiple aspects, to obtain the best description of the environment. Each perception system on the drone obtains only part of the information about an obstacle.

Fusion combines sensor information with different characteristics: real-time or non-real-time, rapidly or slowly changing, fuzzy or precise, consistent or contradictory. It also brings in measurement data, statistical data, and empirical data. Redundant or complementary information in space or time is then processed with methods such as clustering or Kalman filtering. The result is a consistent interpretation or description of potential airborne threats.
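As one example of Kalman-filter fusion, the sketch below tracks the range and range rate to a single obstacle with a constant-velocity model. At each step it applies sequential updates from two sensors with different noise levels. The sensor variances (a coarse "radar" and a precise "lidar"), the process-noise level, and the scenario are all hypothetical. The filter weights each reading by its variance, so the fused estimate is tighter than either sensor alone.

```python
import numpy as np


class RangeKalmanFilter:
    """Constant-velocity Kalman filter on (range, range rate) that fuses
    range readings from several sensors with different noise levels."""

    def __init__(self, range0_m, rate0_mps=0.0, p0=100.0, accel_var=0.1):
        self.x = np.array([range0_m, rate0_mps], dtype=float)  # state estimate
        self.P = np.eye(2) * p0                                 # state covariance
        self.accel_var = accel_var                              # process noise, (m/s^2)^2

    def predict(self, dt):
        F = np.array([[1.0, dt], [0.0, 1.0]])
        G = np.array([[0.5 * dt**2], [dt]])
        self.x = F @ self.x
        self.P = F @ self.P @ F.T + self.accel_var * (G @ G.T)

    def update(self, z_m, meas_var):
        """Sequential update with one sensor's range reading and its variance."""
        H = np.array([[1.0, 0.0]])
        S = (H @ self.P @ H.T).item() + meas_var  # innovation variance
        K = (self.P @ H.T)[:, 0] / S               # Kalman gain
        self.x = self.x + K * (z_m - self.x[0])
        self.P = (np.eye(2) - np.outer(K, H[0])) @ self.P


if __name__ == "__main__":
    # Hypothetical scenario: obstacle closing at 20 m/s,
    # radar with sigma 5 m and lidar with sigma 0.5 m.
    rng = np.random.default_rng(1)
    kf = RangeKalmanFilter(range0_m=500.0)
    dt = 0.1
    for k in range(1, 51):
        true_range = 500.0 - 20.0 * k * dt
        kf.predict(dt)
        kf.update(true_range + rng.normal(0.0, 5.0), meas_var=25.0)   # radar
        kf.update(true_range + rng.normal(0.0, 0.5), meas_var=0.25)  # lidar
    print(kf.x, np.sqrt(kf.P[0, 0]))
```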

A multi-source information fusion sensing system integrates drone navigation information, radar and photoelectric data, and TCAS and ADS-B information. It makes effective use of the complementarity of the different sensors to obtain more comprehensive information about potential airborne threats. This makes avoidance safer and more reliable.

3. Decision system

The decision-making system is mainly responsible for judging the threat that an obstacle poses, based on the information provided by the perception system. Commonly used criteria include:

(1) Relative height difference;

(2) Relative distance;

(3) The rate of change of relative distance, that is, relative speed;

(4) Reserved time, which is the quotient of relative distance and relative speed.
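The four criteria can be computed from the intruder's relative position and velocity. The "relative speed" here is the rate of change of the relative distance (the closing speed). The reserved time is the relative distance divided by that speed, as defined above. This is a minimal sketch assuming an east/north/up frame in metres. A diverging intruder is given an infinite reserved time, which is a convention chosen here, not one from the source. The function assumes a nonzero distance.

```python
import math

import numpy as np


def threat_criteria(rel_pos_m, rel_vel_mps):
    """Compute the four decision criteria for one intruder.

    rel_pos_m, rel_vel_mps: intruder position/velocity minus own-ship values,
    as (east, north, up). Assumes a nonzero relative distance.
    """
    p = np.asarray(rel_pos_m, dtype=float)
    v = np.asarray(rel_vel_mps, dtype=float)
    distance = float(np.linalg.norm(p))
    range_rate = float(p @ v) / distance  # d(distance)/dt; negative when closing
    closing_speed = -range_rate
    reserved_time = distance / closing_speed if closing_speed > 0 else math.inf
    return {
        "height_diff_m": abs(float(p[2])),
        "distance_m": distance,
        "relative_speed_mps": closing_speed,
        "reserved_time_s": reserved_time,
    }


if __name__ == "__main__":
    print(threat_criteria((1000.0, 0.0, 100.0), (-50.0, 0.0, 0.0)))
```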

The reserved time is especially important. According to air traffic management regulations, obstacle threats can be divided into four levels [4]:

(1) Other traffic level: the relative distance is greater than 6 nautical miles or the relative altitude difference is greater than 1200 feet; there is no risk of collision;

(2) Approaching traffic level: the relative distance is less than 6 nautical miles and the relative altitude difference is less than 1200 feet; there is no immediate risk of collision;

(3) Traffic alert level: the reserved time is 35-45 s; there is a potential collision risk, and an avoidance decision should be prepared in advance;

(4) Avoidance decision level: the reserved time is 20-30 s; there is a potential collision risk, and an avoidance decision must be made.
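The four levels can be encoded as a simple classifier. One assumption is needed. The quoted reserved-time bands (35-45 s and 20-30 s) leave times below 20 s and between 30 s and 35 s unassigned. This sketch therefore treats 30 s and 45 s as upper thresholds, so every reserved time maps to some level. The unit conversions use 1 nautical mile = 1852 m and 1 foot = 0.3048 m.

```python
import math

NMI_TO_M = 1852.0
FT_TO_M = 0.3048


def threat_level(distance_m, height_diff_m, reserved_time_s):
    """Map the decision criteria to the four threat levels.

    Assumption: 30 s and 45 s are used as upper thresholds for the
    avoidance-decision and traffic-alert levels, closing the gaps
    between the quoted bands.
    """
    if reserved_time_s <= 30.0:
        return "avoidance decision"
    if reserved_time_s <= 45.0:
        return "traffic alert"
    if distance_m < 6 * NMI_TO_M and height_diff_m < 1200 * FT_TO_M:
        return "approaching traffic"
    return "other traffic"


if __name__ == "__main__":
    print(threat_level(distance_m=5000.0, height_diff_m=100.0, reserved_time_s=math.inf))
```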

4. Route planning system

Route planning for obstacle avoidance is the process of determining the optimal path. It starts from the motion state of the threat target (obtained from sensing information), the collision avoidance point, and the collision reserved time. It also takes into account fuel consumption and the UAV's maneuvering characteristics. It is essentially a multi-constraint optimization problem, for which many algorithms exist; the artificial potential field method is one of the most widely used.

The artificial potential field method is a virtual force field method that borrows the concept of a field from physics to construct a potential field in the task space. In this field, the target position exerts an attractive force that draws the UAV toward the target point. Obstacles exert a repulsive force that keeps the UAV from colliding with them. A collision-free, safe path is found by following the direction in which the potential function decreases.

The force acting on the drone is the resultant of the attractive and repulsive forces. This resultant determines the drone's direction of motion, from which its next position is calculated. The artificial potential field algorithm is simple and fast to compute, and it suits environments with both dynamic and static obstacles [12].
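A minimal sketch of the method follows, using the common quadratic attractive potential and the classic repulsive potential that acts only within an influence distance rho0. The gains, rho0, step length, and goal tolerance are hypothetical values. The example is 2-D for brevity, but the NumPy code works unchanged for 3-D positions. The loop stops if the resultant force vanishes, because the method can stall in such local minima.

```python
import numpy as np


def apf_force(p, goal, obstacles, k_att=1.0, k_rep=1.0, rho0=2.0):
    """Resultant of the attractive and repulsive forces at position p."""
    p = np.asarray(p, dtype=float)
    goal = np.asarray(goal, dtype=float)
    # Attractive force: negative gradient of U_att = 0.5 * k_att * |p - goal|^2.
    force = k_att * (goal - p)
    for obs in obstacles:
        diff = p - np.asarray(obs, dtype=float)
        rho = np.linalg.norm(diff)
        if 0.0 < rho < rho0:
            # Repulsive force: negative gradient of
            # U_rep = 0.5 * k_rep * (1/rho - 1/rho0)^2, active only within rho0.
            force = force + k_rep * (1.0 / rho - 1.0 / rho0) / rho**2 * (diff / rho)
    return force


def apf_path(start, goal, obstacles, step=0.1, tol=0.15, max_iters=1000, **gains):
    """Move a fixed step along the resultant force until the goal is reached."""
    p = np.asarray(start, dtype=float)
    goal = np.asarray(goal, dtype=float)
    path = [p.copy()]
    for _ in range(max_iters):
        if np.linalg.norm(goal - p) < tol:
            break
        f = apf_force(p, goal, obstacles, **gains)
        norm = np.linalg.norm(f)
        if norm < 1e-9:  # local minimum: attraction and repulsion cancel
            break
        p = p + step * f / norm
        path.append(p.copy())
    return np.array(path)


if __name__ == "__main__":
    path = apf_path(start=(0.0, 0.0), goal=(10.0, 0.0), obstacles=[(5.0, 1.0)])
    clearance = np.min(np.linalg.norm(path - np.array([5.0, 1.0]), axis=1))
    print(len(path), path[-1].round(2), round(float(clearance), 2))
```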

Compared with other three-dimensional route planning algorithms, the artificial potential field method has significant advantages. First, when planning a route it only needs to calculate the resultant force at the current position and plan the avoidance from the UAV's current motion state. Its most notable feature is therefore its small computational load and high speed. Second, it produces a smooth and safe route. Other route planning algorithms must smooth the route afterwards and may also need to re-check flight safety constraints such as the minimum straight-flight distance and the maximum climb angle.

5. Summary and Outlook

UAV autonomous perception and avoidance technology spans many fields:

- sensor design;
- signal and information processing;
- environmental perception;
- target detection, recognition, and tracking;
- obstacle threat assessment;
- route planning.

It also involves policies and regulations on airspace management and flight safety, which makes it a complex piece of systems engineering. As UAVs spread through military and civilian fields, autonomous perception and avoidance will become an indispensable capability for them.

At present, both cooperative and non-cooperative sensing systems have developed considerably. TCAS can obtain increasingly accurate information, and both radar and photoelectric sensors can detect targets farther away and more accurately. Real-time fusion of multi-source data will be the main development direction in the next stage.

Because the route planning system relies mainly on information from the perception system, the next problem to solve is how to make full use of the characteristics of each of its sensors.

Structurally, information in the UAV perception and avoidance system flows through three steps. The sensors first acquire and process external information. The processed information then goes to the decision-making system for judgment. Finally, the decision-making system's instructions go to the route planning system. A transmission delay in any of these links can degrade the overall performance of the system, so improving its robustness to transmission delay is also very important.
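A quick calculation shows why transmission delay matters. An obstacle closing at speed v moves v·τ while a measurement is delayed by τ, so the system acts on a position that is already stale by that amount. One simple mitigation, which this document does not itself propose, is to propagate each delayed measurement forward by the known latency under a constant-velocity assumption. The speeds and latency in the example are hypothetical.

```python
import numpy as np


def stale_position_error_m(relative_speed_mps, latency_s):
    """Distance an obstacle moves while its measurement is in transit."""
    return relative_speed_mps * latency_s


def compensate_latency(position_m, velocity_mps, latency_s):
    """Propagate a delayed obstacle measurement to the current time,
    assuming constant velocity over the delay."""
    return np.asarray(position_m, dtype=float) + np.asarray(velocity_mps, dtype=float) * latency_s


if __name__ == "__main__":
    # Hypothetical numbers: 50 m/s closing speed and a 200 ms end-to-end delay.
    print(stale_position_error_m(50.0, 0.2))
    print(compensate_latency([100.0, 0.0], [-50.0, 0.0], 0.2))
```

Constant-velocity extrapolation removes the bulk of the error for steadily moving obstacles. It cannot anticipate a maneuver that begins during the delay, which is why shortening the delay itself remains important.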

In terms of overall system function, UAV autonomous sensing and avoidance systems should mainly develop in the following directions to become more intelligent:

(1) Avoid a wider range of obstacle types;

(2) Adapt to a variety of operating environments;

(3) Shorten the system's decision cycle to avoid sudden maneuvers of the UAV.